How to handle anti-scraping measures when collecting data

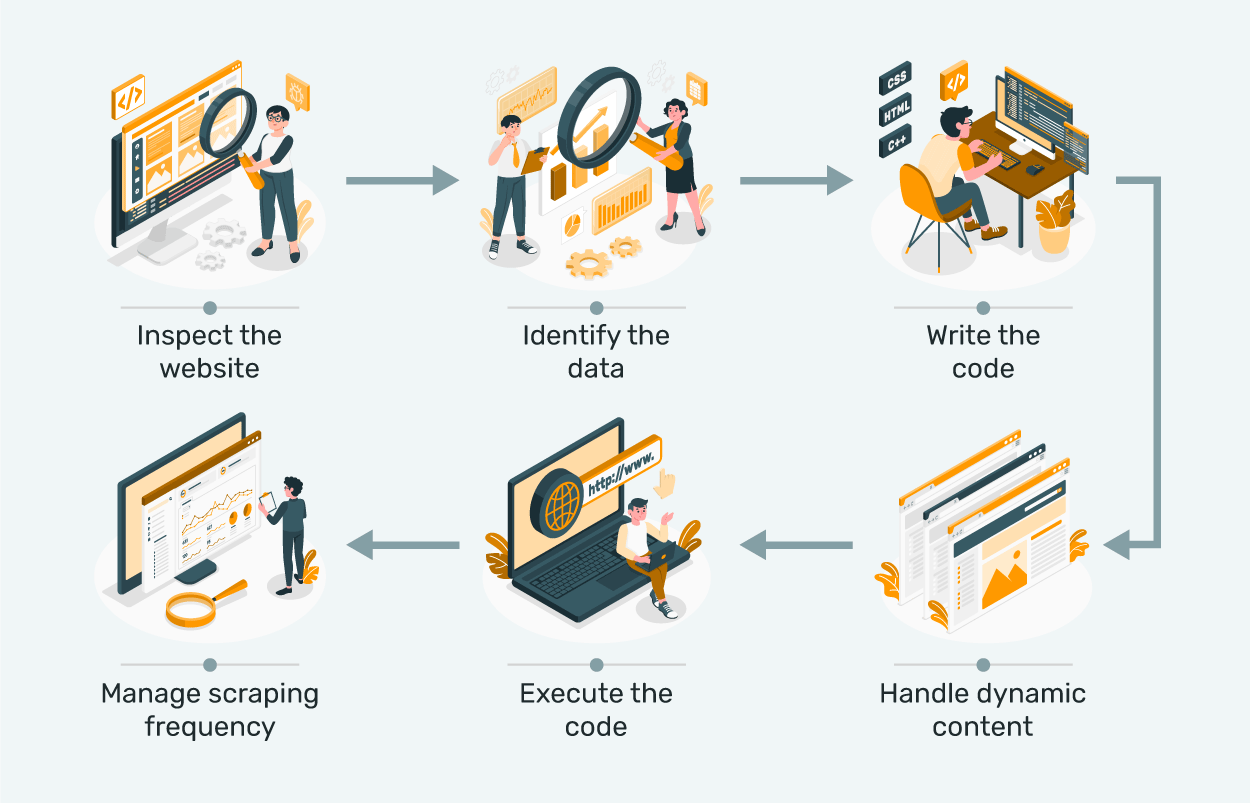

As a web scraping service provider, we understand the importance of collecting data efficiently from various websites. However, many websites…