Although web scraping is a legitimate business practice, some websites do not allow data extraction. The most common reason is the fear that a high volume of requests will flood the site’s servers and, in extreme cases, cause the site to crash. Other sites block scraping over geolocation concerns, for example, content whose copyright is limited to specific countries. Whatever the reason, it is important to understand which blocks currently exist and how to overcome them. Here are some of the most common website blocks and their solutions:

An IP (Internet Protocol) address is a unique number assigned, temporarily or permanently, to a device connected to a network that uses the Internet Protocol. It works much like a telephone number: just as a phone number is tied to one subscriber, an IP address is tied to one device.

The blocking: Sometimes websites block you based on the location of your IP address. This type of geolocation blocking is common on websites that tailor their available content to the customer’s location. Other times, websites want to reduce traffic from non-humans (e.g., crawlers). In that case, a website can block your access depending on the type of IP address you are using.

The solution: Use an international proxy network that offers a wide selection of IP addresses, of different types and in different countries. This lets you appear as a real user in the desired location so that you can access the data you need.
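As a rough illustration of routing traffic through a proxy in a chosen country, the sketch below uses only Python’s standard library. The proxy hostnames, port, credentials, and the `PROXIES_BY_COUNTRY` table are placeholders invented for this example, not real endpoints; an actual proxy provider will document its own address format.

```python
import urllib.request

# Hypothetical proxy endpoints keyed by country code. Replace these with
# the addresses supplied by your own proxy provider.
PROXIES_BY_COUNTRY = {
    "us": "http://user:pass@us.proxy.example.com:8080",
    "de": "http://user:pass@de.proxy.example.com:8080",
}


def proxies_for(country: str) -> dict:
    """Return a scheme-to-proxy mapping for the given country code."""
    try:
        endpoint = PROXIES_BY_COUNTRY[country.lower()]
    except KeyError:
        raise ValueError(f"no proxy configured for country {country!r}") from None
    # Use the same proxy endpoint for plain HTTP and for HTTPS traffic.
    return {"http": endpoint, "https": endpoint}


def open_via(country: str, url: str, timeout: float = 10.0):
    """Open `url` through the proxy configured for `country`."""
    handler = urllib.request.ProxyHandler(proxies_for(country))
    opener = urllib.request.build_opener(handler)
    return opener.open(url, timeout=timeout)
```

With real endpoints in the table, `open_via("de", "https://example.com/")` would send the request through the German proxy, so the target site sees that proxy’s IP address rather than yours.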

IP throughput is measured between the DSLAM and the network user. A DSLAM (digital subscriber line access multiplexer) is equipment on the local operator’s network that routes data to and from ADSL subscribers by grouping their lines onto a single medium. Rate limiting means capping traffic so that it cannot exceed a configured limit; on a network switch, for example, the cap is applied per port. If the limit set on a port is too low, problems such as degraded video stream quality and slower response times can occur. Websites apply the same idea to the requests they receive.

The blocking: This type of blocking limits your access based on the number of requests sent from a single IP address within a given period. This can mean 300 requests per day or ten requests per minute, depending on the target site. When you exceed the limit, you get an error message or a CAPTCHA that checks whether you are a human or a machine.
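To make the "error message" case concrete, the sketch below decides how long a scraper should pause after a response. It assumes the target site signals the limit with the standard HTTP status 429 (Too Many Requests) and, optionally, a `Retry-After` header; not every site does, and some return a different status or a CAPTCHA page instead. The function name and the 60-second fallback are choices made for this example.

```python
import email.utils
import time
from typing import Mapping, Optional


def seconds_to_wait(
    status: int,
    headers: Mapping[str, str],
    default: float = 60.0,
    now: Optional[float] = None,
) -> float:
    """Return how long to pause after a response (0.0 means no pause)."""
    if status != 429:
        return 0.0
    # Header names are case-insensitive, so normalise them before lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    retry_after = lowered.get("retry-after")
    if retry_after is None:
        return default
    retry_after = retry_after.strip()
    # Retry-After is either a number of seconds or an HTTP date.
    if retry_after.isdigit():
        return float(retry_after)
    try:
        when = email.utils.parsedate_to_datetime(retry_after)
    except (TypeError, ValueError):
        return default  # unparseable value: fall back to the default pause
    current = time.time() if now is None else now
    return max(0.0, when.timestamp() - current)
```

A scraper would call this after each response and sleep for the returned number of seconds before sending the next request.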

The solution: One way to get around traffic restrictions and blocks is to use a residential proxy service, such as the one offered by OceanProxy, so that your requests are spread across many IP addresses instead of coming from a single one.

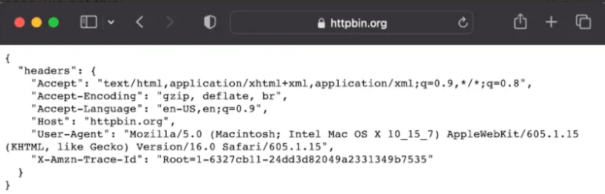

A user agent is a string that every web browser sends in the User-Agent HTTP header when it connects to a server. This string tells a website, among other things, which browser and operating system the visitor is using.
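For illustration, the sketch below attaches an explicit User-Agent header to a request using Python’s standard library. The browser string is only an example value, and `build_request` is a helper name made up for this sketch. Without an explicit header, `urllib` identifies itself with a `Python-urllib/<version>` string, which makes scripted traffic easy to recognize.

```python
import urllib.request

# Example desktop-browser User-Agent string, used purely for illustration.
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/120.0.0.0 Safari/537.36"
)


def build_request(url: str, user_agent: str = BROWSER_UA) -> urllib.request.Request:
    """Create a request object that carries an explicit User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


req = build_request("https://example.com/")
# urllib stores header names via str.capitalize(), hence "User-agent" here.
print(req.get_header("User-agent"))
```

Nothing is sent over the network in this snippet; passing `req` to `urllib.request.urlopen` would perform the actual request.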

The blocking: Your IP address can be blocked if a site’s traffic analysis suggests that you are a bad bot. For example, if you flood a target site with requests while scraping, you risk being blocked.

The solution: There are two main ways to bypass rate limiting. First, you can cap the number of requests you send per second. This slows down the crawl but helps you stay under the site’s throughput limits. Second, you can use a proxy that rotates IP addresses before your requests hit the target site’s rate limits. Some websites also use the User-Agent HTTP header to identify specific devices and block access. Rotate your proxies to overcome this type of block.
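Both approaches can be sketched in a few lines of Python. The `Throttle` class enforces a minimum delay between requests, and `next_identity` cycles through a pool of proxies and User-Agent strings. All names, proxy addresses, and User-Agent values here are hypothetical, and rotating the User-Agent alongside the proxy is an addition made by this sketch rather than something every proxy service does for you.

```python
import itertools
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, max_per_second: float, clock=time.monotonic, sleep=time.sleep):
        if max_per_second <= 0:
            raise ValueError("max_per_second must be positive")
        self.min_interval = 1.0 / max_per_second
        self._clock = clock
        self._sleep = sleep
        self._last = None  # release time of the previous request

    def wait(self) -> float:
        """Sleep if the previous request was too recent; return seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
            if delay > 0:
                self._sleep(delay)
        # Record the moment this request is released.
        self._last = now + delay
        return delay


# Placeholder pools; substitute your provider's proxies and real browser strings.
PROXIES = [
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) ExampleBrowser/1.0",
    "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0",
]

_proxy_pool = itertools.cycle(PROXIES)
_ua_pool = itertools.cycle(USER_AGENTS)


def next_identity() -> tuple:
    """Return the next (proxy, user_agent) pair in round-robin order."""
    return next(_proxy_pool), next(_ua_pool)
```

In a crawl loop, you would call `throttle.wait()` and then `next_identity()` before each request, so that requests are both spaced out and spread across several identities. Choosing `max_per_second` below the target site’s limit is left to you, since the limit varies from site to site.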
